With this post I want to write down some of my thoughts about the nature of consciousness. It’s not meant as established truth but rather as a hypothesis.

I’m not going to cover every aspect of it, as this would take too long and the very definition of consciousness already varies greatly between people. But let’s consider the part we deal with every day: we have the impression that our conscious mind is somehow supervising or controlling our “automatic” behaviour and is somewhat separate from it. To make a long story short, I believe all of this is an illusion, and I want to explain why.

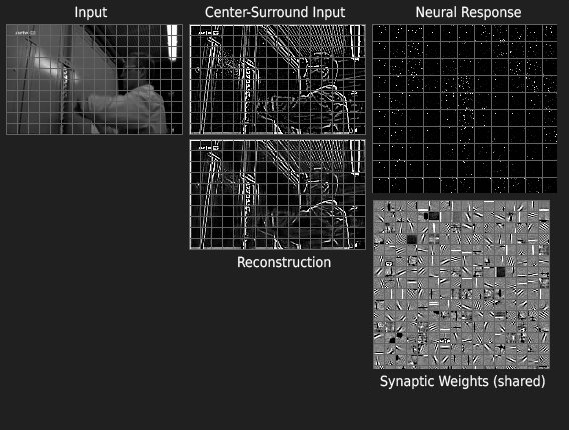

Let’s consider the brain as a sort of “machine” which senses its environment through connected sensors of different types (sight, hearing, touch, smell, taste) and produces behaviour via connected muscles. This “machine” is able to adapt so that it produces behaviour (output) from senses (input) in order to survive and reproduce, an ability which evolved under evolutionary pressure.

It adapts by recognizing repeating patterns in the world and behaving in a way which has been beneficial in the same situation in the past, while avoiding behaviours which were disadvantageous. Being able to recognize repeating patterns also means being able to predict the future to some extent: you may recognize the beginning of a sequence and simply expect it to unfold the same way it did all the times you experienced it before. Of course there are plenty of details to this, but that is another story.
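To make the idea of “recognizing the beginning of a sequence and expecting the rest” a bit more concrete, here is a tiny toy sketch in Python. It is only an illustration and not a claim about how brains actually do it: the class name `SequencePredictor`, its methods and the example events are all made up for this post, and it assumes the simplest possible rule, namely “expect whatever followed most often before”.

```python
from collections import Counter, defaultdict


class SequencePredictor:
    """Hypothetical toy: remembers which event tended to follow which."""

    def __init__(self):
        # For every event seen so far, count the events that came right after it.
        self.transitions = defaultdict(Counter)

    def observe(self, sequence):
        # Walk over neighbouring pairs (current event, following event).
        for current, following in zip(sequence, sequence[1:]):
            self.transitions[current][following] += 1

    def predict(self, current):
        # Expect the most frequent follower; None if the event was never seen.
        followers = self.transitions.get(current)
        if not followers:
            return None
        return followers.most_common(1)[0][0]


predictor = SequencePredictor()
for _ in range(3):
    predictor.observe(["clouds", "rain", "puddles"])  # experienced three times
predictor.observe(["clouds", "sun"])                  # experienced once

expected_after_clouds = predictor.predict("clouds")  # the more common continuation
expected_after_snow = predictor.predict("snow")      # never experienced before
```

After these experiences the predictor expects rain after clouds, simply because that is what happened most often, and it has no expectation at all for something it has never seen.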

Now what about consciousness? I think it is just a consequence of this way of processing. First of all, we experience our own behaviour. Let’s assume there is no such thing as a conscious mind. We have just learned what is best to do in the situation we’re currently in. We do something and at the same time sense that we’re doing it, in a feedback manner. This holds even for infants, who are certainly not conscious in the common sense and whose behaviour is far from optimal, but whose behaviour gets refined with every trial as they figure out what works and what doesn’t. As a side note, the sensory feedback loop is not the only feedback in higher animals, including humans: these more advanced brains also contain internal neural feedback loops that keep “context”, so something that is out of sight is not immediately out of mind, which greatly enhances predictive capabilities.

What happens over time is that we can predict our own behaviour in familiar situations just as we can predict the situation itself. Basically, our behaviour is just part of the situation. This is especially true for newborns, who are not aware that e.g. their arm is part of them: for them it’s no more special than the toy beside it, and the difference is only learned later on.

And here is the catch: what we call consciousness is simply the ability to predict our own behaviour, which gives us the illusion of actually causing or being in control of that behaviour, which is not the case. Consciousness is not necessary for behaviour, but a consequence, a side effect of how that behaviour is acquired by means of prediction from past experiences and feedback – a completely overrated illusion (at least in the being-in-control definition used here).

Usually this illusion is pretty much perfect, but in uncommon situations it sometimes becomes evident, as we simply cannot predict our behaviour accurately anymore. In extreme situations it may even result in a “conscious breakdown”: not being able to predict oneself anymore and therefore completely “losing control and/or the sense of self” (feeling as if driven by an autopilot). Fortunately most people will never experience such extreme situations, but the same effect can be produced by interfering with that self-prediction through various means such as drugs, sensory deprivation or brain damage. What these have in common is an impairment of memory, sensing or prediction capabilities, which impairs the prediction of one’s own behaviour and gives the perception of losing control or not being oneself anymore (altered states of consciousness). In the extreme case of being completely incapable of predicting our own actions, the ego dissolves out of existence.
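The hypothesis from the last few paragraphs can be caricatured in a few lines of Python. Again, this is just a sketch under heavy assumptions: the `Agent` class, the `policy` function, the situations and the “sense of control” measure (the share of own actions that were predicted correctly) are all invented here for illustration. The important detail is that the behaviour never consults the self-prediction; the prediction only runs alongside it.

```python
def policy(situation):
    """Hypothetical 'automatic' behaviour: a fixed mapping from situation to action."""
    habits = {"food": "approach", "predator": "flee", "friend": "greet"}
    return habits.get(situation, "freeze")


class Agent:
    def __init__(self, policy):
        self.policy = policy   # produces the behaviour
        self.self_model = {}   # learned expectation: situation -> own action

    def act(self, situation):
        predicted = self.self_model.get(situation)  # what I expect myself to do
        action = self.policy(situation)             # what I actually do
        self.self_model[situation] = action         # feedback: I sense my own action
        return predicted == action                  # did I predict myself?


def sense_of_control(agent, situations):
    """Fraction of own actions the agent predicted correctly."""
    hits = [agent.act(s) for s in situations]
    return sum(hits) / len(hits)


agent = Agent(policy)
first_contact = sense_of_control(agent, ["food", "predator", "friend"])
familiar = sense_of_control(agent, ["food", "predator", "friend"])
unfamiliar = sense_of_control(agent, ["earthquake", "fire", "flood"])
```

In familiar situations the agent predicts itself perfectly, in unfamiliar ones not at all, yet in both cases the actions come from exactly the same `policy`. In this toy, the “feeling of being in control” is nothing more than successful self-prediction.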

I’m pretty sure that the idea that consciousness is needed for normal behaviour is wrong. In a way it’s the other way around. Not being conscious is a sign of impaired brain function, but consciousness itself is still an unnecessary illusion. First there was behaviour from prediction and sensory feedback, and as a result there was the illusion of ego and consciousness.

But if you now think this means all your “conscious efforts” are futile, because you really are just a machine, you didn’t get the point. 😉