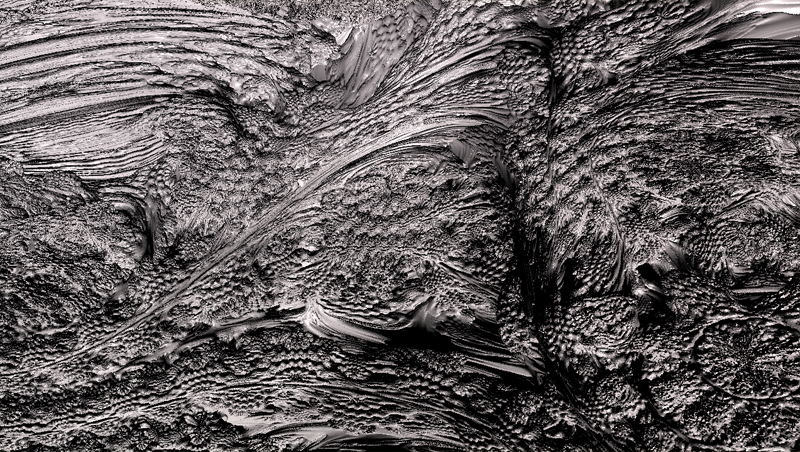

I tried a new way of rendering voxel datasets. This first iteration is just a proof of concept, but it already looks very promising.

When I started this project, my goal was to render large voxel-based game scenes in real time. My approach automatically converts the original voxel data into a set of heightmaps, which are organized in space with a bounding volume hierarchy (BVH). The actual rendering is done with ray tracing.
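The conversion step isn't described in detail here, so the following is only a minimal sketch of one plausible scheme, not the method used in this project. It assumes the voxels are given as a set of solid `(x, y, z)` coordinates with z pointing up. Each `(x, y)` column is split into contiguous solid runs from the top down, and the k-th run goes into heightmap k, so overhangs naturally produce extra heightmaps. Each heightmap's bounding box can then serve as a BVH leaf. The names `Heightmap`, `aabb` and `voxels_to_heightmaps` are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical conversion scheme; not necessarily the one used in this project.
Voxel = tuple[int, int, int]  # (x, y, z), z pointing up


@dataclass
class Heightmap:
    # Maps a column (x, y) to the solid run (bottom, top) stored at that cell.
    cells: dict[tuple[int, int], tuple[int, int]] = field(default_factory=dict)

    def aabb(self) -> tuple[Voxel, Voxel]:
        """Inclusive voxel-space bounds, usable as a BVH leaf box."""
        xs = [x for x, _ in self.cells]
        ys = [y for _, y in self.cells]
        zs = [z for span in self.cells.values() for z in span]
        return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def voxels_to_heightmaps(voxels: set[Voxel]) -> list[Heightmap]:
    """Peel solid voxels into layered heightmaps.

    Each column's occupied z values are split into contiguous runs, ordered
    top-down; the k-th run from the top goes into heightmap k. A column with
    an overhang therefore contributes to more than one heightmap.
    """
    columns: dict[tuple[int, int], list[int]] = defaultdict(list)
    for x, y, z in voxels:
        columns[(x, y)].append(z)

    layers: list[Heightmap] = []
    for xy, zs in columns.items():
        zs.sort(reverse=True)
        runs = []
        top = prev = zs[0]
        for z in zs[1:]:
            if z != prev - 1:  # gap in the column: close the current run
                runs.append((prev, top))
                top = z
            prev = z
        runs.append((prev, top))  # (bottom, top) of the lowest run
        for k, span in enumerate(runs):
            while len(layers) <= k:
                layers.append(Heightmap())
            layers[k].cells[xy] = span
    return layers


# A 2x1 floor at z=0 plus one floating voxel above column (0, 0).
scene = {(0, 0, 0), (1, 0, 0), (0, 0, 3)}
maps = voxels_to_heightmaps(scene)
```

Here the floating voxel and the top of the floor end up in the first heightmap, and the floor cell hidden beneath the overhang ends up in a second one. Peeling by run rank alone can produce scattered, non-compact layers; a practical converter would likely also split each layer into spatially compact patches so the BVH boxes stay tight.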

The implementation is still largely unoptimized, but it already runs at over 60 fps on a single GPU at an output resolution of 1024×1024.
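To put that frame rate into perspective, assuming one primary ray per pixel and ignoring any secondary rays, the primary-ray throughput works out to roughly:

$$
1024 \times 1024 = 1\,048\,576 \ \text{rays per frame}, \qquad 1\,048\,576 \times 60\ \text{s}^{-1} \approx 6.3 \times 10^{7}\ \text{rays/s}
$$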

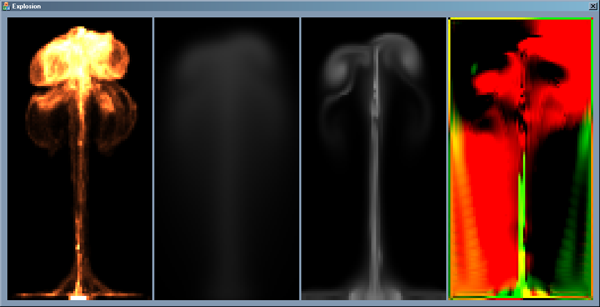

The following rendering shows a fairly simple scene (about 17 million voxels before conversion).

The differently colored patches show the individual heightmaps the scene was converted into.